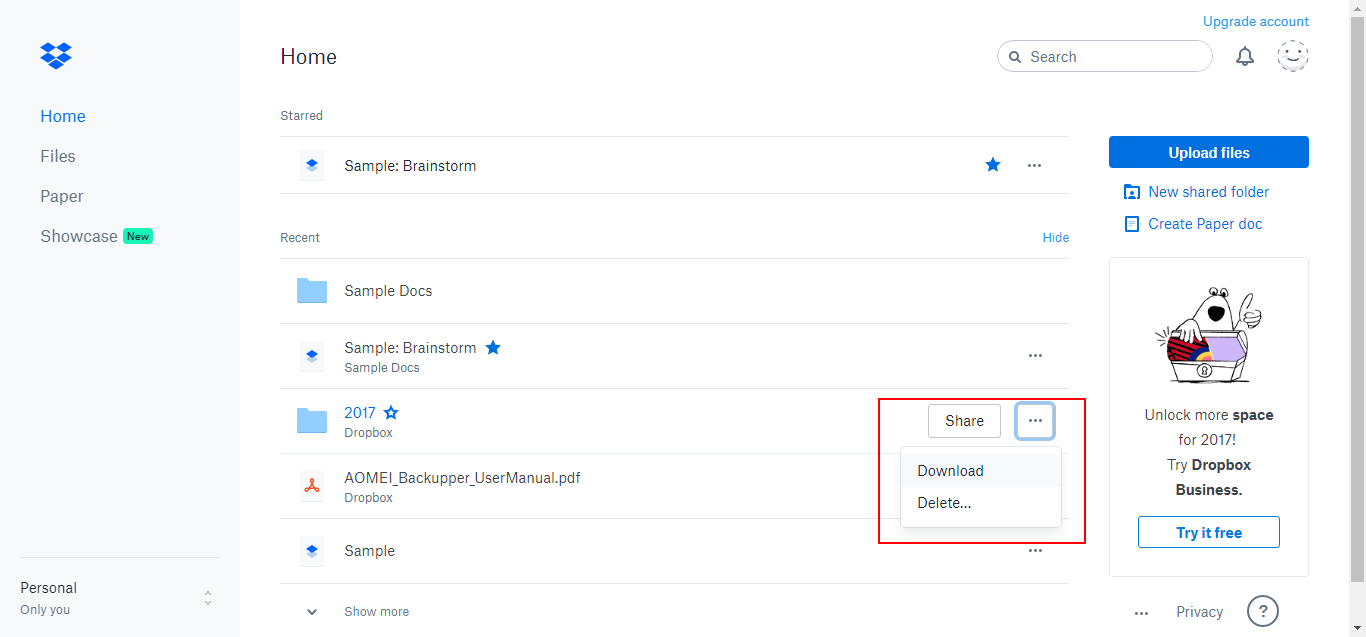

Google Colaboratory is a great tool for data science and machine learning practitioners nowadays. Because a Colaboratory notebook runs on a GPU-enabled remote compute instance on Google Cloud, it is quite difficult to upload a dataset or any CSV file to that instance.

The easiest way is to upload our files to the Public folder in Dropbox. We copy the public link and pass it to `wget` as the last argument. From a terminal the command is `$ wget -O news.csv` followed by the link; in Google Colaboratory it is the same command with a leading `!`, that is, `!wget -O news.csv` followed by the link.

Unfortunately, Google Drive is not as easy as Dropbox: it does not provide a direct public link that lets us fetch the file. When you turn on Link Sharing, it gives a virtual path instead. Since our team uses Google Drive as its primary means of file sharing, we needed a solution for it. Luckily, there is a tool called Gdown, which can download a file directly from a Google Drive virtual path.
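As a rough illustration of the Google Drive case, here is a minimal Python sketch that can be pasted into a Colaboratory cell. It assumes the `gdown` package is installed and that the shared link has one of the two common shapes noted in the comments. The helper names `drive_file_id` and `download_from_drive` are made up for this example and are not part of Gdown.

```python
import re
from typing import Optional


def drive_file_id(share_link: str) -> Optional[str]:
    """Extract the file ID from two common Google Drive link shapes (assumed)."""
    patterns = [
        r"/file/d/([A-Za-z0-9_-]+)",  # .../file/d/<id>/view?usp=sharing
        r"[?&]id=([A-Za-z0-9_-]+)",   # .../open?id=<id> or .../uc?id=<id>
    ]
    for pattern in patterns:
        match = re.search(pattern, share_link)
        if match:
            return match.group(1)
    return None


def download_from_drive(share_link: str, output: str) -> Optional[str]:
    """Download a link-shared Drive file to `output` using gdown."""
    import gdown  # third-party package: pip install gdown

    file_id = drive_file_id(share_link)
    if file_id is None:
        raise ValueError("could not find a file ID in the link")
    # Build the download-style URL from the ID and let gdown fetch it.
    url = f"https://drive.google.com/uc?id={file_id}"
    return gdown.download(url, output, quiet=False)
```

Calling `download_from_drive(link, "news.csv")`, where `link` is the virtual path copied from Link Sharing, should save the file next to the notebook. The file must be shared so that anyone with the link can view it; otherwise the download is likely to fail.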
